import os

import requests

from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

import pandas as pd

14 Working with Data from the Web I

When you scrape or "harvest" data from the web, it is useful to know the basics of html, the markup language that is used to create websites. A browser interprets the html code and shows you the formatted website.

When we download information from the web, we usually download this source code and then run a parser over the html source code to extract information.

You can look at the source code with all the html-tags of a website in your browser. Simply right click (if on Windows) and click on view frame source or view page source, depending on your browser.
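To make the idea of "running a parser over the html source code" concrete, here is a minimal sketch that uses only Python's built-in html.parser module (not the BeautifulSoup library used in the rest of this chapter). The page source and the class name LinkCollector are made up for illustration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag encountered."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs of the opening tag
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value is not None:
                    self.links.append(value)


# Made-up page source, standing in for what a browser would download
page_source = """
<html>
  <head><title>Example page</title></head>
  <body>
    <h1>Working papers</h1>
    <p>See <a href="http://example.com/paper1.html">Paper 1</a> and
       <a href="http://example.com/paper2.html">Paper 2</a>.</p>
  </body>
</html>
"""

collector = LinkCollector()
collector.feed(page_source)
print(collector.links)
```

The parser walks through the tags one by one and hands each opening tag to handle_starttag, which is where we decide what information to keep.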

14.1 Beautiful Soup Library

The Python library BeautifulSoup helps us parse the information out of the html data that we get after downloading a web page. The additional module urllib.request allows us to download the source code of websites. We therefore first need to import these two libraries.

You may have to install the charset-normalizer library first using

conda install charset-normalizer==3.1.0

The first package allows us to open web pages and the second, BeautifulSoup parses the html code and stores it in an easily accessible database format, or object. This object has methods that are tailor made to extract information from the html code of the website that we scrape from. The other two packages are Pandas and Regular Expressions. The latter is useful for pattern matching as you will see below. See also the chapter on Regular Expressions in these lecture notes.

14.2 Scraping Professor’s Website

If you scrape from any site, make sure you read the user guidelines, privacy documentation, and API rules, so that you do not run afoul of the law!

14.2.1 Introduction

In this project we will analyze the research of Professor Jung.

We start by collecting all working papers listed on the professor's research website. We will extract the title of each paper, the number of references, the number of citations, and the download link to the pdf version of the working paper. We then store everything in a Pandas dataframe for further analysis.

In a second step we will download all the working papers and save them to a local file directory called ‘paperdownload_folder’.

Third, we load each pdf article and extract the abstract information from the article and store that into a python list.

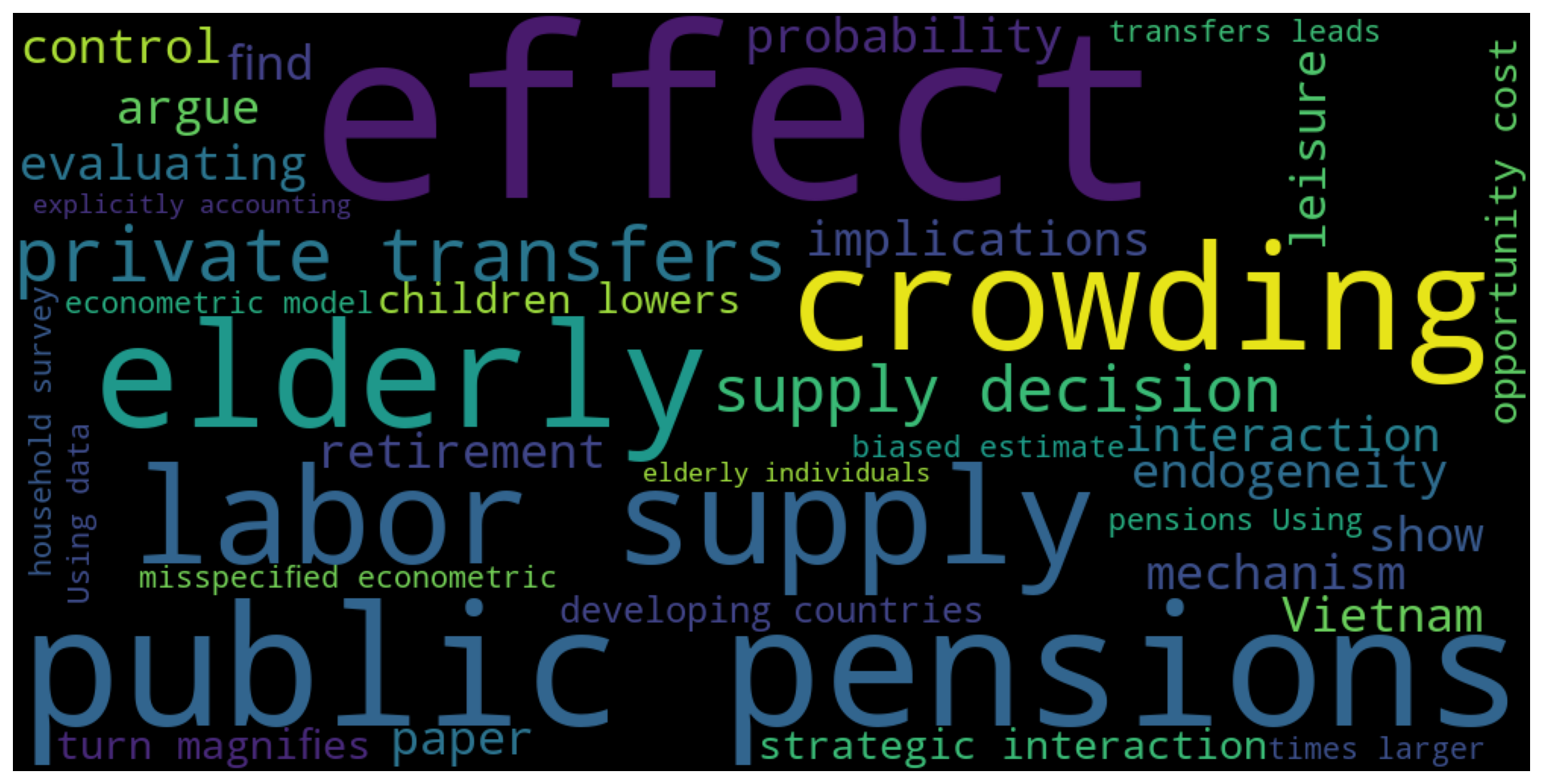

Finally, we will make a word cloud that shows the prevalence of certain nouns used in the article which will highlight the research interests of the professor.

We first define the Website URL and assign it to a variable.

# Define url to website
jurl = 'https://juejung.github.io/research.htm'

We next open the website, read the page's html code, and assign it to the variable html.

html = urlopen(jurl).read()

We then pass the html code to BeautifulSoup, which stores it in a format that allows us to sort through the html code more systematically.

soup = BeautifulSoup(html.decode('utf-8', 'ignore'), "lxml")

14.2.2 Extracting Information from html Code

Next look at the source code of the webpage (right click on the webpage and select View page source) and convince yourself that the links to the working papers all start with:

https://ideas.repec.org

(some of them use http:// instead of https://). If you inspect the soup object, it is a bit easier to see that all working papers are part of a link element that starts with:

<a >

where <a> is the anchor element that starts a section that contains a link. A typical piece of html code with a link looks something like this:

<a href="http://example.com/">Link to example.com</a>

where href stands for hypertext reference (or link) and the </a> at the end of the line closes the link section. That is, <a ...> is the opening tag and </a> is the closing tag.

We next extract all these a sections from the soup object. We limit the extraction to those a sections that contain the actual links to working papers, as opposed to other links that are also part of the html code of the professor's research page. Since we observed above that the links to the working papers all contain the ideas keyword, we will filter for these particular a sections next.

# Now select all links with the keyword "ideas"
linkSections = soup.select("a[href*=ideas]")

We can now define an empty list to store all the links to the working papers, and then loop over the selected a sections and extract the href value of each one. We append all these links to the list link_list.

Let us test this first before we run a loop. Let's have a look at the first element of our extracted list.

print(linkSections[0])

<a href="http://ideas.repec.org/p/tow/wpaper/2017-01.html">
Health Risk, Insurance and Optimal Progressive Income
Taxation</a>

Next let us have a look at the link to the working paper in that section.

print(linkSections[0]['href'])

http://ideas.repec.org/p/tow/wpaper/2017-01.html

This is already a complete (absolute) URL, so we do not need to combine it with the address stored in the variable jurl. We can copy/paste it directly into a browser.
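To see what the selector a[href*=ideas] does and does not match, here is a small self-contained sketch on a made-up html snippet. It assumes the bs4 package is installed and uses Python's built-in "html.parser" backend instead of lxml; the names snippet, toy_soup, toy_links and toy_titles are illustrative only.

```python
from bs4 import BeautifulSoup

# Made-up html snippet with three links; only two point to ideas.repec.org
snippet = """
<ul>
  <li><a href="http://ideas.repec.org/p/abc/wpaper/0001.html">Paper A</a></li>
  <li><a href="https://ideas.repec.org/p/abc/wpaper/0002.html">Paper B</a></li>
  <li><a href="http://example.com/teaching.html">Teaching</a></li>
</ul>
"""

# "html.parser" ships with Python, so this sketch does not need lxml
toy_soup = BeautifulSoup(snippet, "html.parser")

# [href*=ideas] keeps <a> tags whose href contains the substring "ideas"
matches = toy_soup.select("a[href*=ideas]")
toy_links = [a["href"] for a in matches]
toy_titles = [a.get_text() for a in matches]

print(toy_links)
print(toy_titles)
```

The substring match (*=) is convenient here because it catches both the http:// and the https:// variants of the links, which a prefix match (^=) on one of the two would not.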

link_list = []
for link in linkSections:
    # Store all links in a list
    newLink = link['href']
    link_list.append(newLink)

Let's print the length of the list and its first 6 entries.

print(len(link_list))
print(link_list[0:6])

20
['http://ideas.repec.org/p/tow/wpaper/2017-01.html', 'http://ideas.repec.org/p/tow/wpaper/2020-04.html', 'http://ideas.repec.org/p/tow/wpaper/2020-02.html', 'http://ideas.repec.org/p/tow/wpaper/2016-02.html', 'http://ideas.repec.org/p/tow/wpaper/2020-03.html', 'https://ideas.repec.org/p/tow/wpaper/2016-16.html']

14.2.3 Storing Information in a DataFrame

We then create an empty dataframe that has the same number of rows as our list. In addition we add empty columns so that we can store the title, the number of citations, the number of references, and the download link later on.

index = range(len(link_list))

columns = ['Links', 'Title', 'Citations', 'References', 'Download-Link']

df = pd.DataFrame(index=index, columns=columns)

We next assign the link_list with all the working paper links to the dataframe.

df['Links'] = link_list

We then start the loop that runs through our list of working paper links and opens each page separately. We then grab the title, the number of references, the number of citations, and the pdf download link and store this information in the current row of our dataframe.

The following code might not run all the way through. The reason is that sometimes when you try to open a website with a crawler script, you may run into a server side issue where the website cannot be accessed for a split second, in which case the line:

html = urlopen(df['Links'][i]).read()

in the script below either fails or returns a page without the content we expect. The script then tries to read information that is not there, which ends up in errors such as:

IndexError: list index out of range

We learn how to deal with such issues in the next section.

14.2.4 Extract the Title and Reference Count for the First Working Paper

Let us now start with the link to the first working paper. We again read in the html code and decode it as a soup object.

i = 0
# Open first working paper link
html = urlopen(df['Links'][i]).read()
# Assign it to Soup object
soup = BeautifulSoup(html.decode('utf-8', 'ignore'), "lxml")

We first extract the title. This is such a common task that it is pre-programmed in the Beautiful Soup library.

# Extract info and store in dataframe
# (df.loc writes directly into row i; chained indexing such as
# df['Title'][i] = ... may not update the dataframe in recent Pandas versions)
df.loc[i, 'Title'] = soup.title.get_text()

We are next looking for the information about the reference count. This requires a bit of detective work. After staring at the html code for a bit we find that the number of references is in an html block with the keyword id="refs-tab". We use this info and select the block of text where this occurs.

print(soup.find_all('a', id="refs-tab"))

[<a aria-controls="refs" aria-selected="false" class="nav-link" data-toggle="tab" href="#refs" id="refs-tab" role="tab">34 References</a>]

This returns a list, so let us just grab the content (first entry) of this list.

print(soup.find_all('a', id="refs-tab")[0])

<a aria-controls="refs" aria-selected="false" class="nav-link" data-toggle="tab" href="#refs" id="refs-tab" role="tab">34 References</a>

Now let us just get the text between the html tags using the get_text() function.

print(soup.find_all('a', id="refs-tab")[0].get_text())

34 References

Ok, our number is in there. Let us now split this string into separate strings so that we can grab our number more easily.

print(soup.find_all('a', id="refs-tab")[0].get_text().split())

['34', 'References']

Almost there, grab the first element of this list.

print(soup.find_all('a', id="refs-tab")[0].get_text().split()[0])

34

This is still a string, so let us convert it to an integer so we can store it in our dataframe and do math with it.

print(int(soup.find_all('a', id="refs-tab")[0].get_text().split()[0]))

34

And finally stick it into our dataframe in the References column at row zero, which is the first row.

df.loc[0, 'References'] = int(soup.find_all('a', id="refs-tab")[0].get_text().split()[0])

Let us have a quick look at the dataframe to see whether it has been stored in the correct position.

print(df.head())

                                              Links  \
0  http://ideas.repec.org/p/tow/wpaper/2017-01.html
1  http://ideas.repec.org/p/tow/wpaper/2020-04.html
2  http://ideas.repec.org/p/tow/wpaper/2020-02.html
3  http://ideas.repec.org/p/tow/wpaper/2016-02.html
4  http://ideas.repec.org/p/tow/wpaper/2020-03.html

                                               Title Citations References  \
0  Health Risk, Insurance and Optimal Progressive...       NaN         34
1                                                NaN       NaN        NaN
2                                                NaN       NaN        NaN
3                                                NaN       NaN        NaN
4                                                NaN       NaN        NaN

  Download-Link
0           NaN
1           NaN
2           NaN
3           NaN
4           NaN

14.2.5 Extract the Citations and PDF download links

Next we follow a similar procedure to extract the "Citations" information.

# Extracting number of citations
df.loc[0, 'Citations'] = int(soup.find_all('a', id="cites-tab")[0].get_text().split()[0])

ValueError: invalid literal for int() with base 10: 'Citations'

The previous command throws an error when no citations are available yet. In that case the text of the tab is just 'Citations' without a leading number, so the conversion to an integer fails and the entry in the dataframe simply stays NaN.
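One way to avoid this kind of ValueError is to check whether the tab text actually starts with a number before converting it. The following is a minimal sketch using the standard-library re module; the helper name leading_count is hypothetical and not part of Beautiful Soup or Pandas.

```python
import re


def leading_count(tab_text):
    """Return the integer at the start of tab_text, or None if there is none."""
    match = re.match(r"\s*(\d+)", tab_text)
    if match is None:
        return None
    return int(match.group(1))


print(leading_count("34 References"))  # tab text with a count
print(leading_count("Citations"))      # tab text without a count
```

A return value of None can then be stored as a missing value instead of stopping the script.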

Let us have a look at the dataframe again.

print(df.head())

                                              Links  \
0  http://ideas.repec.org/p/tow/wpaper/2017-01.html
1  http://ideas.repec.org/p/tow/wpaper/2020-04.html
2  http://ideas.repec.org/p/tow/wpaper/2020-02.html
3  http://ideas.repec.org/p/tow/wpaper/2016-02.html
4  http://ideas.repec.org/p/tow/wpaper/2020-03.html

                                               Title Citations References  \
0  Health Risk, Insurance and Optimal Progressive...       NaN         34
1                                                NaN       NaN        NaN
2                                                NaN       NaN        NaN
3                                                NaN       NaN        NaN
4                                                NaN       NaN        NaN

  Download-Link
0           NaN
1           NaN
2           NaN
3           NaN
4           NaN

Finally, here is the entire code that extracts all the information for the working paper:

# First working paper i.e., first element of the Links column in dataframe
i = 0
# Open first working paper link
html = urlopen(df['Links'][i]).read()
# Assign it to Soup object
soup = BeautifulSoup(html.decode('utf-8', 'ignore'), features="lxml")
# Extract info and store in row i of the dataframe
df.loc[i, 'Title'] = soup.title.get_text()
df.loc[i, 'References'] = int(soup.find_all('a', id="refs-tab")[0].get_text().split()[0])
df.loc[i, 'Citations'] = int(soup.find_all('a', id="cites-tab")[0].get_text().split()[0])
# Store the download link in row i only; assigning to df['Download-Link']
# without a row index would overwrite the entire column
df.loc[i, 'Download-Link'] = str(soup.find_all(string=re.compile(r'webapps'))[0])

ValueError: invalid literal for int() with base 10: 'Citations'

14.2.6 Extract Remaining Working Paper Info Using Loop

We finally scrape all the other working papers in the same way. In order to make this a bit nicer we simply put it into a loop.

for i in range(len(link_list)):
    # Print progress for the first and last few links only
    if i<5 or i>len(link_list)-5:
        print('{} out of {}'.format(i, len(link_list)))
    # Open working paper link i
    html = urlopen(df['Links'][i]).read()
    # Assign it to Soup object
    soup = BeautifulSoup(html.decode('utf-8', 'ignore'), "lxml")
    # Extract info and store in row i of the dataframe
    df.loc[i, 'Title'] = soup.title.get_text()
    df.loc[i, 'References'] = int(soup.find_all('a', id="refs-tab")[0].get_text().split()[0])
    df.loc[i, 'Citations'] = int(soup.find_all('a', id="cites-tab")[0].get_text().split()[0])
    # Store the download link in row i only, not in the entire column
    df.loc[i, 'Download-Link'] = str(soup.find_all(string=re.compile(r'webapps'))[0])

0 out of 20
ValueError: invalid literal for int() with base 10: 'Citations'

This results in an error as soon as one of the many working paper pages cannot be loaded because of a server issue, or does not contain all the information we are looking for. Do not worry. We will fix this now.

14.3 How to Deal with Errors

Sometimes a website is down or cannot be read for some reason. In this case the line in the above script that opens or loads the webpage, html = urlopen(df['Links'][i]).read(), may fail, or the page may come back without the content we expect. The next lines in the script that rely on this content would then break the code and throw an error.

In order to circumvent that, we can put the entire code block into a try-except statement. In this case the Python interpreter will try to load the content of the website and extract all the info from it. However, if any of these steps fails then, instead of breaking the code, the interpreter simply jumps into an alternate branch (the except part) and continues running the commands that are there.
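Because many server side issues only last for a split second, a natural extension of the try-except idea is to retry a failed request a few times before giving up. The following is a minimal sketch of that pattern, not the approach used in the script of this section. The names fetch_with_retry and flaky_fetch are hypothetical, and flaky_fetch merely simulates a server that fails twice before it answers.

```python
import time


def fetch_with_retry(fetch, url, attempts=3, wait_seconds=0.0):
    """Call fetch(url) up to `attempts` times; return None if every try fails.

    `fetch` is any function that returns the page content or raises an
    exception, for example: lambda u: urlopen(u).read()
    """
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception as e:
            print('Attempt {} for {} failed: {}'.format(attempt, url, e))
            time.sleep(wait_seconds)
    return None


# Hypothetical stand-in for a flaky server: fails twice, then succeeds
calls = {"n": 0}


def flaky_fetch(url):
    calls["n"] += 1
    if calls["n"] < 3:
        raise OSError("temporary server error")
    return b"<html><title>ok</title></html>"


page = fetch_with_retry(flaky_fetch, "http://example.com/paper.html")
print(page)
```

With a real website one would pass a small positive wait_seconds so that the server gets a moment to recover between attempts.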

for i in range(len(link_list)):
    # Print progress for the first and last few links only
    if i<5 or i>len(link_list)-5:
        print('{} out of {}'.format(i, len(link_list)))
    # Sometimes a website is down or cannot be read for some reason.
    # The try-except statement catches the resulting error.
    try:
        # Open working paper link i
        html = urlopen(df['Links'][i]).read()
        # Assign it to Soup object
        soup = BeautifulSoup(html.decode('utf-8', 'ignore'), "lxml")
        # Extract info and store in row i of the dataframe
        # We extract:
        # Title of article
        # Number of references used by working paper
        # Number of citations of working paper
        # Download URL (or link) to pdf so we can download pdf files later
        df.loc[i, 'Title'] = soup.title.get_text()
        df.loc[i, 'References'] = int(soup.find_all('a', id="refs-tab")[0].get_text().split()[0])
        df.loc[i, 'Citations'] = int(soup.find_all('a', id="cites-tab")[0].get_text().split()[0])
        # Store the download link in row i only, not in the entire column
        df.loc[i, 'Download-Link'] = str(soup.find_all(string=re.compile(r'webapps'))[0])
    except Exception as e:
        print('Something is wrong with link {}'.format(i))
        print('Probably a server side issue!')
        # The next line prints the error message
        print(e)

0 out of 20

Something is wrong with link 0

Probably a server side issue!

invalid literal for int() with base 10: 'Citations'

1 out of 20

2 out of 20

Something is wrong with link 2

Probably a server side issue!

list index out of range

3 out of 20

4 out of 20

Something is wrong with link 13

Probably a server side issue!

list index out of range

Something is wrong with link 15

Probably a server side issue!

list index out of range

16 out of 20

Something is wrong with link 16

Probably a server side issue!

list index out of range

17 out of 20

18 out of 20

19 out of 20

Something is wrong with link 19

Probably a server side issue!

list index out of range

If you write your code in this way, your program will not stop when it hits a bad link. It will simply print the exception message and then continue with the next link from the link_list.

# Look at the first rows of the dataframe
df.head()

| | Links | Title | Citations | References | Download-Link |
|---|---|---|---|---|---|
| 0 | http://ideas.repec.org/p/tow/wpaper/2017-01.html | Health Risk, Insurance and Optimal Progressive... | NaN | 34 | http://webapps.towson.edu/cbe/economics/workin... |
| 1 | http://ideas.repec.org/p/tow/wpaper/2020-04.html | Healthcare Reform and Gender Specific Infant M... | 1 | 29 | http://webapps.towson.edu/cbe/economics/workin... |
| 2 | http://ideas.repec.org/p/tow/wpaper/2020-02.html | Health Risk and the Welfare Effects of Social ... | NaN | NaN | http://webapps.towson.edu/cbe/economics/workin... |
| 3 | http://ideas.repec.org/p/tow/wpaper/2016-02.html | Social Health Insurance: A Quantitative Explor... | 5 | 99 | http://webapps.towson.edu/cbe/economics/workin... |
| 4 | http://ideas.repec.org/p/tow/wpaper/2020-03.html | Coronavirus Infections and Deaths by Poverty S... | 14 | 16 | http://webapps.towson.edu/cbe/economics/workin... |

We finally sort the data according to the number of citations, starting with the most cited working paper, and print the first couple of entries.

In Pandas there was a change from df.sort() to df.sort_values(). Make sure you use the latter command.

# Sort by impact measured by the working paper's citations
df.sort_values('Citations', ascending = False).head()

| | Links | Title | Citations | References | Download-Link |
|---|---|---|---|---|---|
| 8 | https://ideas.repec.org/p/tow/wpaper/2014-01.html | Market Inefficiency, Insurance Mandate and Wel... | 50 | 59 | http://webapps.towson.edu/cbe/economics/workin... |
| 6 | https://ideas.repec.org/p/tow/wpaper/2013-01.html | Fiscal Austerity Measures: Spending Cuts vs. T... | 17 | 26 | http://webapps.towson.edu/cbe/economics/workin... |
| 4 | http://ideas.repec.org/p/tow/wpaper/2020-03.html | Coronavirus Infections and Deaths by Poverty S... | 14 | 16 | http://webapps.towson.edu/cbe/economics/workin... |
| 17 | http://ideas.repec.org/p/tow/wpaper/2010-12.html | The Macroeconomics of Health Savings Accounts | 14 | 51 | http://webapps.towson.edu/cbe/economics/workin... |
| 7 | https://ideas.repec.org/p/tow/wpaper/2016-04.html | Aging and Health Financing in the U.S. A Gener... | 12 | 55 | http://webapps.towson.edu/cbe/economics/workin... |
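Since some papers have no citation count, it is worth checking how sorting treats missing values. The following sketch uses a small made-up dataframe (the titles and counts are not scraped data) and assumes that pandas and numpy are installed. It first converts the column to a numeric type, which is one possible way to make sure that numbers rather than generic Python objects are compared.

```python
import numpy as np
import pandas as pd

# Made-up citation counts; np.nan marks a paper whose count could not be scraped
toy = pd.DataFrame(
    {
        "Title": ["Paper A", "Paper B", "Paper C", "Paper D"],
        "Citations": pd.Series([5, np.nan, 50, 14], dtype=object),
    }
)

# Convert to a numeric column so that sorting compares numbers
toy["Citations"] = pd.to_numeric(toy["Citations"], errors="coerce")

# Most cited first; rows without a count are placed at the end
ranked = toy.sort_values("Citations", ascending=False, na_position="last")
print(ranked)
```

The argument na_position="last" is the default and is only spelled out here to make the treatment of missing counts explicit.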

14.4 Downloading the PDF files and Storing them on disk

In order to help with the PDF download we use the requests library. We also write a little helper function for the download that we define first.

# Helper function that will download a pdf file from a specific URL
# and save it under the name: file_name
def download_pdf(url, file_name, headers):
    # Send GET request
    response = requests.get(url, headers=headers)
    # Save the PDF if the request was successful (status code 200)
    if response.status_code == 200:
        with open(file_name, "wb") as f:
            f.write(response.content)
    else:
        print(response.status_code)

Now we use the main script to generate the sub-directory for storing the articles.

# [2] Save pdfs in a subfolder called 'paperdownload_folder'
# ---------------------------------------------------------------
current_directory = os.getcwd()
final_directory = os.path.join(current_directory, r'paperdownload_folder')
if not os.path.exists(final_directory):
    os.makedirs(final_directory)

# Define HTTP Headers
headers = {
    "User-Agent": "Firefox/124.0",
}

# Run loop over all paper urls stored in our dataframe above
for i in range(len(df)):
    # The try-except sits inside the loop so that one bad link
    # does not stop the remaining downloads
    try:
        print("Downloading file: ", i)
        # Define URL of a PDF
        url = df['Download-Link'][i]
        # Define PDF file name
        file_name = os.path.join(final_directory, "file" + str(i) + ".pdf")
        # Download PDF
        download_pdf(url, file_name, headers)
    except Exception as e:
        print('Something is wrong with pdf link {}'.format(i))
        print('Probably a server side issue!')
        print(e)

print('Download Complete')

Downloading file: 0

Downloading file: 1

Downloading file: 2

Downloading file: 3

Downloading file: 4

Downloading file: 5

Downloading file: 6

Downloading file: 7

Downloading file: 8

Downloading file: 9

Downloading file: 10

Downloading file: 11

Downloading file: 12

Downloading file: 13

Downloading file: 14

Downloading file: 15

Downloading file: 16

Downloading file: 17

Downloading file: 18

Downloading file: 19

Download Complete

14.5 Open PDF Files and Scrape Abstract for Word Cloud

We next load all the previously downloaded pdf articles and scrape them for the abstract info. We put all the abstract info into a list and then produce a word cloud to highlight the research interests of the professor.
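Scraping the abstract amounts to cutting out the text between two marker words on the title page, Abstract and JEL in these papers. Here is a minimal stdlib-only sketch of that slicing step on made-up text. The helper name text_between is hypothetical. It guards against the case where a marker is missing, because str.find returns -1 in that case and a plain slice would then silently return unrelated text.

```python
def text_between(text, first, second):
    """Return the text between the markers first and second, or '' if either is missing."""
    start = text.find(first)
    if start == -1:
        return ""
    start += len(first)
    end = text.find(second, start)
    if end == -1:
        return ""
    # Remove line breaks and surrounding whitespace
    return text[start:end].replace("\n", " ").strip()


# Made-up title-page text standing in for the output of a pdf text extractor
page_text = "Some Title\nAbstract\nWe study health\ninsurance reform.\nJEL: I13, H51\n"

print(text_between(page_text, "Abstract", "JEL"))
print(repr(text_between("no markers here", "Abstract", "JEL")))
```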

We use the third party libraries pdfminer and WordCloud, which we need to install prior to using them.

# conda install -c conda-forge pdfminer.six
from pdfminer.high_level import extract_pages, extract_text

# [1] Open pdfs and scrape Abstract
# -----------------------------------------
nrArticles = len(os.listdir("paperdownload_folder"))
first = 'Abstract'
second = 'JEL'
abstractList = []

for i in range(nrArticles):
    #print(i)
    # Extract text from page 2 (the title page of the paper);
    # page_numbers is zero-based, so page 2 has index 1
    text = extract_text("paperdownload_folder/file"+str(i)+".pdf", page_numbers=[1])
    # Cut text between 'Abstract' and 'JEL'
    temp_text = text[text.find(first)+len(first): text.find(second)]
    # Clean text string: remove line breaks and replace the
    # 'ff' ligature character (U+FB00) with a plain 'ff'
    clean_text = temp_text.replace('\n', ' ').replace('\ufb00', 'ff')
    # Append to list
    abstractList.append(clean_text)

We finally produce the word cloud.

import matplotlib.pyplot as plt
# conda install -c conda-forge wordcloud
from wordcloud import WordCloud

# [2] Make wordcloud out of Abstract text
# -----------------------------------------
# Convert list to string and generate
unique_string = (" ").join(abstractList)

And for plotting we use the following code.

14.6 More Tutorials

Here are additional web tutorials about Python web scraping that you can check out:

- Scraping URLs from the Pycon 2014 Conference by Miguel Grinberg

- A YouTube tutorial for web scraping with Python

- Dan Nguyen's blog entry for scraping public data

- Scrape webpage with beautiful soup

- Data organization

- Scrape information from your favorite website